Do LLMs Just Make Good Websites Better?

There’s excitement everywhere about ChatGPT, Perplexity, Claude and all the other large language models (LLMs) transforming online business. But despite the hype, LLMs aren’t magic wands. You can’t just bolt on AI and hope your eCommerce site becomes smarter overnight.

LLMs don’t fix broken websites – they amplify what’s already there.

In short, GenAI doesn’t make bad websites good. It can make good websites great. The difference comes down to the quality of your site’s foundation and data.

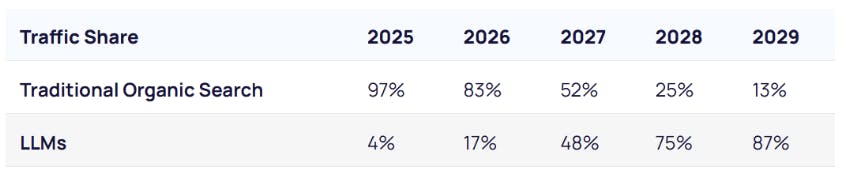

This matters more than ever: LLM traffic is projected to eclipse traditional organic traffic by 2028, driving over 75% of search-related revenue (percentage breakdown below, as reported by Semrush). The future of product discovery is conversational, and if your site isn’t ready, you risk being left behind.

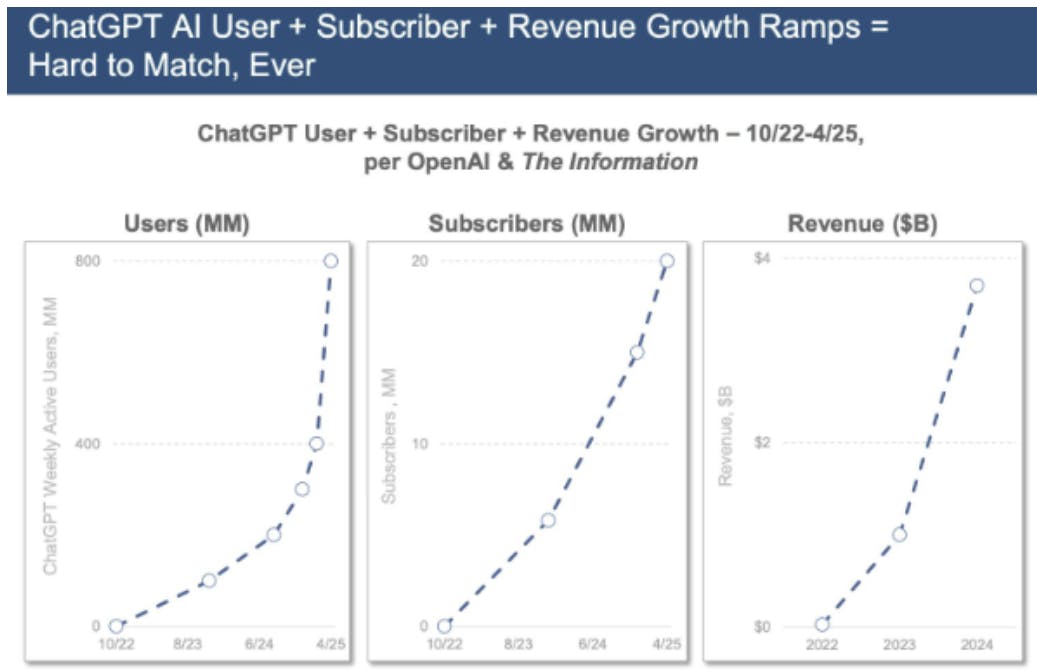

ChatGPT user growth has shattered records - a surge unlike anything we’ve seen in the digital era. It cannot be ignored.

Traditional search engines scan for keywords and page rankings. LLMs, on the other hand, build a deep model of your site’s meaning: your content, structure, product taxonomy, tone, and the broader web context for your business.

It’s not about content volume or blog frequency. LLMs reward clarity, structure, and usefulness.

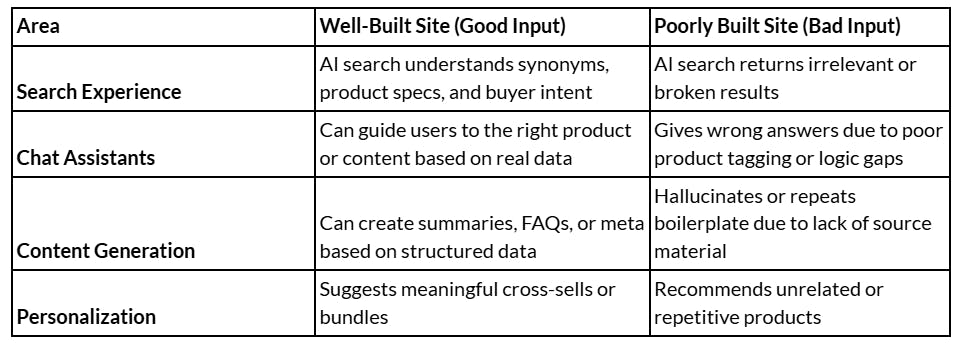

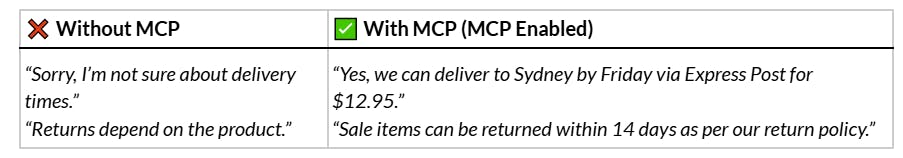

To illustrate what happens when a well-built site meets AI tools - versus what happens when it doesn't - consider the differences below:

In short: AI reflects the strengths - or weaknesses - of your product data, taxonomy, and structure.

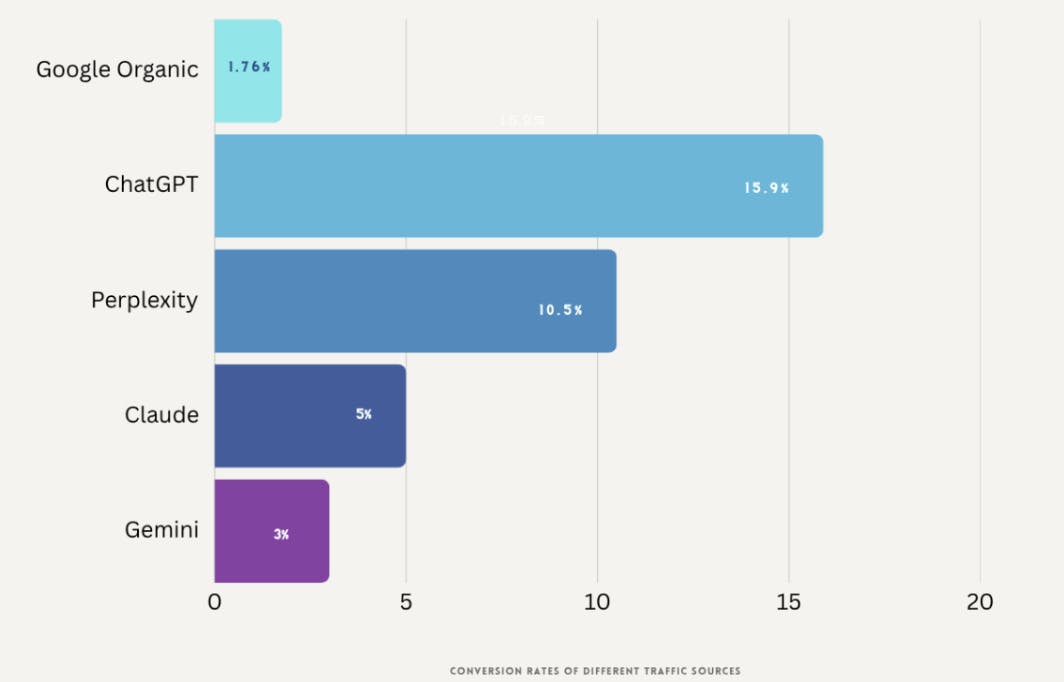

And the payoff can be big: studies show traffic referred by ChatGPT converts at roughly nine times the rate of traditional organic search (15.9% conversion rate for ChatGPT vs ~1.76% for Google). These users arrive with high intent and strong engagement.

LLMs Reward Structure and Clarity

To work well, LLMs need:

- Structured content: clean product hierarchies and consistent attribute sets

- Semantic clarity: meaningful headings, clear relationships between sections

- Trust signals: cross-linking to authoritative, consistent sources (e.g., FAQs, reviews)

- Rich metadata: schema.org markup, OpenGraph tags, structured product and review data

If your product descriptions are complete, your taxonomy logical, and your markup correct, LLMs can dramatically improve Q&A accuracy, product discovery, and customer engagement. But if your site is full of vague terms, inconsistent data, or outdated information, LLMs will echo that confusion.
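As a rough illustration of the "rich metadata" point, here is a minimal Python sketch that turns a product record into schema.org Product markup (JSON-LD). The function name `product_jsonld` and the sample shoe data are hypothetical; in practice the values would come from your catalog or PIM, and you would choose the schema.org properties that suit your products.

```python
import json


def product_jsonld(name, description, sku, price, currency,
                   sizes, colours, in_stock, rating, review_count):
    """Build a schema.org Product object and return it as a JSON-LD string."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "size": sizes,      # explicit sizes rather than "variety of sizes"
        "color": colours,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical sample product, for illustration only.
    print(product_jsonld(
        name="Trail Runner 2",
        description="Lightweight trail running shoe with a grippy outsole.",
        sku="SHOE-001",
        price=129.95,
        currency="AUD",
        sizes=["US 8", "US 9", "US 10"],
        colours=["Black", "Blue"],
        in_stock=True,
        rating=4.6,
        review_count=132,
    ))
```

The output would typically be embedded in the product page inside a `<script type="application/ld+json">` tag.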

Example

Imagine two sites selling shoes:

Site A lists all sizes and colours, includes reviews marked up with schema.org, and maintains consistent branding. LLM-driven tools can confidently answer nuanced customer questions.

Site B says “variety of sizes available,” has inconsistent imagery, and outdated returns info. LLMs will reflect that ambiguity.

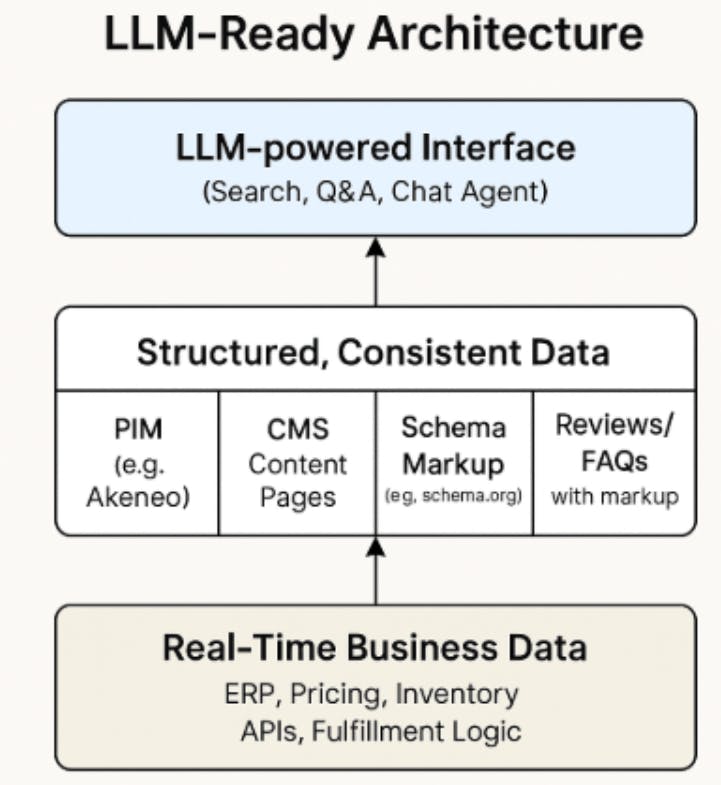

PIM – The Foundation for LLM Readiness

Managing product data at scale - across thousands of SKUs and multiple channels - is tough. That’s where a Product Information Management (PIM) system such as Akeneo helps.

A strong PIM enables:

- Centralised descriptions, attributes, images, and taxonomy

- Clean data governance and automated enrichment

- Rich metadata for LLMs and search engines (e.g., schema.org)

By investing in a modern PIM, you ensure your structured product data is ready for both human users and AI-powered interfaces.

Bridging the Real-Time Data Gap

LLMs aren’t connected to your ERP, CRM, or OMS - yet. They can’t answer these questions out of the box:

- “What’s the price delivered to Sydney by Friday?”

- “Is this in stock near me?”

- “Where is my order?”

- “Are refunds available on sale items?”

But they can surface real-time answers if you expose the right data. To support this:

- Connect your systems of record: expose pricing, inventory, and policies via APIs, feeds, or semantic markup

- Use structured outputs: REST APIs or middleware that reflect current business logic

- Provide fallback logic: ensure LLMs say “I don’t know” instead of guessing or hallucinating
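The fallback idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the `INVENTORY` dictionary stands in for a live inventory API, and the names `StockRecord`, `lookup_stock` and `answer_stock_question` are hypothetical. The point is that the structured answer carries an explicit "unknown" status when no data exists, so the assistant has nothing to guess from.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StockRecord:
    sku: str
    store: str
    quantity: int


# Hypothetical snapshot standing in for a call to a real inventory system.
INVENTORY = {
    ("SHOE-001", "sydney"): StockRecord("SHOE-001", "sydney", 4),
    ("SHOE-001", "melbourne"): StockRecord("SHOE-001", "melbourne", 0),
}


def lookup_stock(sku: str, store: str) -> Optional[StockRecord]:
    """Return the stock record, or None when the system has no data."""
    return INVENTORY.get((sku, store.lower()))


def answer_stock_question(sku: str, store: str) -> dict:
    """Produce a structured answer an LLM can relay without guessing."""
    record = lookup_stock(sku, store)
    if record is None:
        # Explicit fallback: no data means "I don't know", never a guess.
        return {
            "status": "unknown",
            "message": "I don't know the stock level for that item and store.",
        }
    if record.quantity > 0:
        return {"status": "in_stock", "quantity": record.quantity}
    return {"status": "out_of_stock", "quantity": 0}
```

For example, `answer_stock_question("SHOE-001", "Sydney")` reports the item as in stock, while a query for a store that is not in the data returns the "unknown" status rather than an invented quantity.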

Locking your business logic in PDFs or legacy code makes it invisible to both customers and AI.

Feeding Real-Time Context with MCPs

As eCommerce moves toward conversational and AI-driven customer journeys, one of the key enablers of meaningful interactions is the Model Context Protocol (MCP). MCPs allow businesses to feed structured, real-time context - like product availability, pricing, policy rules, and brand tone - directly into large language models (LLMs) such as ChatGPT. Think of MCP as an “API for AI”: it provides responses that are much easier and less ambiguous for the GenAI to consume and present to customers.

Without this context, LLMs operate on incomplete or outdated information, often leading to irrelevant responses or missed opportunities. By implementing MCPs, businesses ensure that AI-powered experiences reflect live data and brand-specific logic, enabling more accurate search results, trustworthy answers, and intent-driven guidance.

The result is a dramatically improved customer experience. Shoppers can ask complex, personalised questions - such as delivery options, compatibility details, or bundling rules - and receive reliable, context-aware responses. MCPs also pave the way for emerging use cases like agentic shopping, where AI agents make decisions on behalf of customers. With structured context delivered through MCPs, LLMs evolve from static content tools into real-time, on-brand digital concierges - reducing friction, boosting trust, and increasing conversions across the funnel.

Customer Query Example

"Can I get these shoes delivered to Sydney by Friday, and what's your return policy on sale items?"

MCPs feed live data into LLMs - so responses reflect your actual stock, shipping options, and business rules, not just static content.
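To make the query above concrete, here is a minimal Python sketch of the kind of structured context a backend might return for it. It deliberately uses no MCP library: a real MCP server would expose something like this as a tool through an MCP SDK, which is not shown. The shipping options, transit times (counted in calendar days), prices and policy wording are all invented for illustration, as are the names `SHIPPING_OPTIONS`, `RETURN_POLICY` and `build_context`.

```python
from datetime import date, timedelta

# Hypothetical business data; in practice this comes from your OMS and policy system.
SHIPPING_OPTIONS = [
    {"name": "standard", "transit_days": 5, "cost_aud": 0.00},
    {"name": "express", "transit_days": 2, "cost_aud": 14.95},
]

RETURN_POLICY = {
    "full_price": "Returns accepted within 30 days of delivery.",
    "sale": "Sale items can be exchanged but not refunded.",
}


def build_context(destination: str, order_date: date,
                  deliver_by: date, on_sale: bool) -> dict:
    """Assemble structured facts for an LLM to phrase into an answer."""
    options = []
    for option in SHIPPING_OPTIONS:
        eta = order_date + timedelta(days=option["transit_days"])
        options.append({
            "name": option["name"],
            "cost_aud": option["cost_aud"],
            "estimated_delivery": eta.isoformat(),
            "arrives_in_time": eta <= deliver_by,
        })
    return {
        "destination": destination,
        "deliver_by": deliver_by.isoformat(),
        "shipping_options": options,
        "return_policy": RETURN_POLICY["sale" if on_sale else "full_price"],
    }


if __name__ == "__main__":
    # Ordered Monday 7 July 2025, needed by Friday 11 July 2025.
    context = build_context("Sydney", date(2025, 7, 7), date(2025, 7, 11), on_sale=True)
    for option in context["shipping_options"]:
        print(option["name"], option["estimated_delivery"], option["arrives_in_time"])
    print(context["return_policy"])
```

With this sample data, only the express option arrives in time, and the LLM can say so with the applicable return rule attached instead of relying on static page content.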

LLMs Are Your New Digital Concierge

Think of LLMs as a front-of-house concierge: helpful, conversational, and fast - but entirely dependent on your backend systems. If your APIs are slow or unavailable, your digital concierge stalls. If product data is inconsistent, it gives wrong answers or misses upsell opportunities.

Your site’s backend reliability directly impacts the quality of the LLM experience.

Bonus: AI-referred traffic is starting to emerge and, in some cases, it’s trackable. Tools like ChatGPT or Perplexity may pass identifiable referral data or custom UTM tags, depending on how users access your site. While not yet consistent, it’s worth monitoring GA4 for patterns linked to AI-driven discovery.
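One way to look for those patterns in your own logs or exports is to classify each visit by referrer hostname and `utm_source`. The Python sketch below is illustrative only: the hostname list is an assumption that will need maintaining, the function name `classify_ai_referral` is hypothetical, and not every AI tool passes a referrer or UTM tag at all.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumed referrer hostnames for AI assistants; extend and maintain as needed.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
}


def classify_ai_referral(landing_url: str, referrer: str) -> Optional[str]:
    """Return the AI tool a visit appears to come from, or None."""
    # 1. Check the referrer hostname, when the browser sent one.
    ref_host = urlparse(referrer).hostname or ""
    if ref_host in AI_REFERRER_HOSTS:
        return AI_REFERRER_HOSTS[ref_host]

    # 2. Fall back to a utm_source tag on the landing URL.
    query = parse_qs(urlparse(landing_url).query)
    utm_source = query.get("utm_source", [""])[0].lower()
    for host, label in AI_REFERRER_HOSTS.items():
        if utm_source in (host, label.lower()):
            return label
    return None
```

For example, a landing URL ending in `?utm_source=chatgpt.com` with an empty referrer would be labelled "ChatGPT", while a visit referred by a traditional search engine would return `None`.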

LLMs Go Beyond SEO

LLMs build on good SEO practices - clarity, structure, and relevance - but add intent and context. This is part of an emerging practice known as Generative Engine Optimisation (GEO).

GEO focuses on:

- Structuring content as direct answers (Q&A style)

- Optimising for conversational tone and relevance (especially for ChatGPT)

- Targeting AI visibility, not just traditional click-throughs

To future-proof your site, invest in:

- Structured data & markup (e.g., schema.org, OpenGraph)

- Knowledge graphs that connect related products, attributes, and categories

- Clean, interconnected content with internal linking, FAQs, and third-party validation

These efforts benefit both your human users and your AI-powered interfaces.
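The Q&A structuring and schema.org markup mentioned above can be combined. As a minimal sketch, the Python below turns a list of question and answer pairs into schema.org FAQPage markup (JSON-LD). The function name `faq_jsonld` and the sample questions are hypothetical, and the answers should mirror text that is actually visible on the page.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical sample content, for illustration only.
    print(faq_jsonld([
        ("Are refunds available on sale items?",
         "Sale items can be exchanged but not refunded."),
        ("Do you deliver to Sydney?",
         "Yes, with standard and express options."),
    ]))
```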

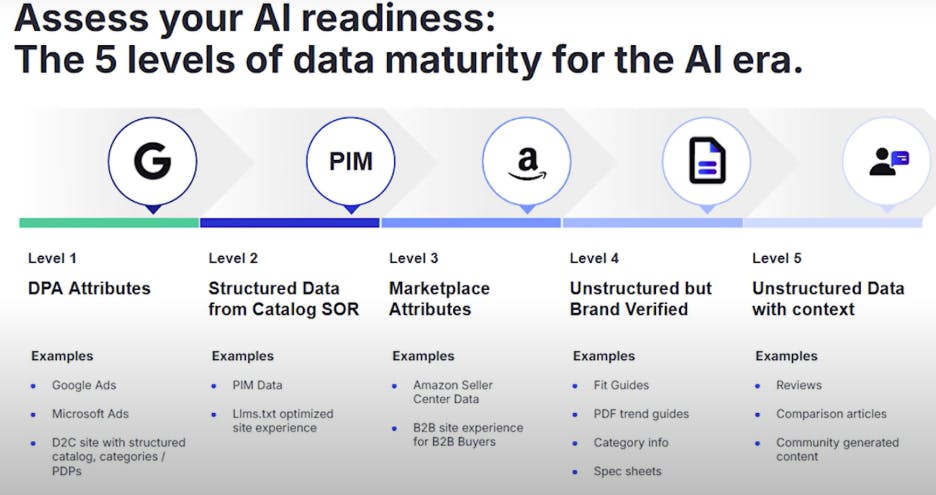

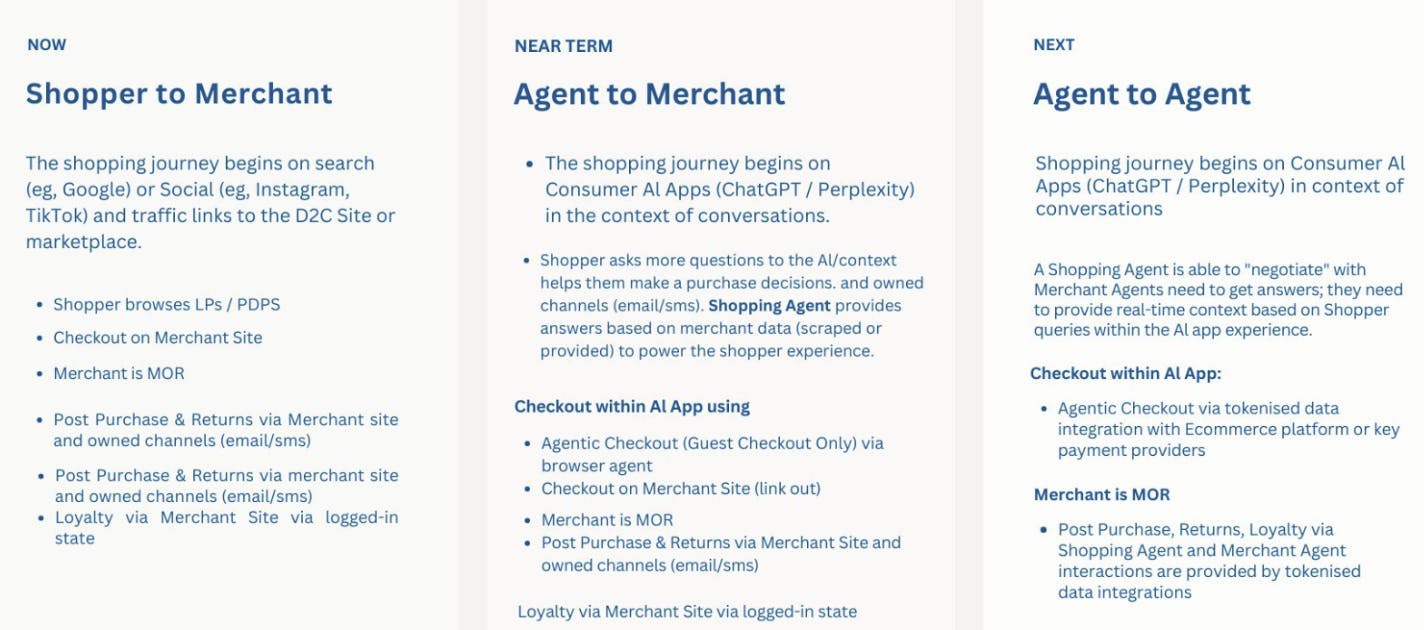

Agentic Shopping: The Next Frontier for Catalog Quality

The rise of agentic shopping - where AI agents autonomously research, recommend, and purchase products on behalf of consumers - is reshaping how e-commerce catalogs must be built.

As highlighted in the recent CommerceNext panel “Breakout: From B2C to A2A — How Agentic Shopping Makes Catalog Quality More Important Than Ever” (July 8, 2025):

- AI agents act as proxies for shoppers and brands, requiring highly enriched, accurate, and multi-faceted product data to power new discovery and purchasing experiences.

- Traditional product feeds optimised for Google or Amazon are no longer enough. To meet AI demands, merchants must supply comprehensive structured attributes plus unstructured content like videos, reviews, and transcripts.

- Shopping queries driven by AI platforms (e.g., Perplexity) are growing rapidly, showing how consumer trust in agentic shopping is increasing.

- Companies like Revelyst adopt an end-user-first approach, delivering brand-aligned rich data to AI platforms to build trust and nuanced recommendations.

- Tools like Feedonomics support brands with tiered data maturity models, helping scale from basic product info to advanced brand-verified content.

- Data accuracy and freshness are paramount, as AI shopping platforms depend on merchant data for pricing, availability, and reviews to maintain trust.
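The accuracy and freshness points lend themselves to a simple automated check. The Python sketch below flags feed items with missing attributes or stale data. It is only an illustration: the required attribute list, the 24-hour freshness threshold and the names `REQUIRED_ATTRIBUTES`, `MAX_AGE` and `audit_product` are assumptions, not requirements of any particular AI platform or feed tool.

```python
from datetime import datetime, timedelta, timezone

# Assumed minimum attribute set and freshness window; tune for your catalog.
REQUIRED_ATTRIBUTES = ("title", "description", "price", "availability", "image_url")
MAX_AGE = timedelta(hours=24)


def audit_product(product: dict, now: datetime) -> list:
    """Return a list of data-quality issues for one feed item."""
    issues = []
    for attribute in REQUIRED_ATTRIBUTES:
        value = product.get(attribute)
        if value is None or value == "":
            issues.append(f"missing {attribute}")

    updated_at = product.get("updated_at")
    if updated_at is None:
        issues.append("missing updated_at")
    elif now - updated_at > MAX_AGE:
        issues.append("stale data")
    return issues


if __name__ == "__main__":
    now = datetime(2025, 7, 8, 12, 0, tzinfo=timezone.utc)
    # Hypothetical feed item with an empty description and old pricing data.
    item = {
        "title": "Trail Runner 2",
        "description": "",
        "price": 129.95,
        "availability": "in_stock",
        "image_url": "https://shop.example/img/shoe-001.jpg",
        "updated_at": now - timedelta(days=3),
    }
    print(audit_product(item, now))
```

Running a check like this across a feed gives a quick list of items to fix before they reach AI shopping platforms.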

The message is clear: commerce is evolving from direct-to-consumer (D2C) to agent-to-agent (A2A), powered by AI agents. To thrive, your catalog must be the foundational storefront - rich, accurate, and continuously optimised for AI.

Where to start - a practical checklist

Aligent has developed a 30-Point Checklist: How LLM-Ready Is Your Site Compared to Your Competitors?

To begin preparing your site for LLM and agentic shopping integration:

✅ Audit your content and product structure for meaning and clarity

✅ Use a robust PIM to centralise and govern product data

✅ Make product, pricing, and inventory data accessible via APIs or feeds

✅ Align brand messaging and policies across all channels

✅ Pilot an LLM use case (e.g., product search or Q&A) on one category

✅ Monitor performance and iterate based on user behaviour and LLM output

✅ Start tracking ChatGPT or Perplexity referral traffic using UTM parameters and GA4 reports

✅ Format some of your content as direct Q&A to increase citation potential in generative results

What you’ll get:

- A detailed audit of your product data structure, schema markup, and API accessibility

- A clear benchmark of where your site stands for AI discoverability

- A prioritised roadmap of quick wins you can implement today

Empowering websites with LLM-driven content, enriched catalogs, and tailored automation is now essential for brands looking to thrive and differentiate in the age of AI-enabled commerce. The brands that champion these innovations will play a pivotal role in maximising clients’ online growth and resilience as the digital landscape transforms.

We look forward to discussing how we can help your brand capture, convert, and optimise for the AI era. Please reach out at https://www.aligent.com.au/contact

Sources:

- Semrush, AI and LLMs Will Drive 75% of Organic Search Revenue by 2028, https://www.semrush.com/blog/ai-search-seo-traffic-study/

- Case Study: 6 Learnings About How Traffic from ChatGPT Converts

- CommerceNext, From B2C to A2A: Agentic Shopping and Catalog Quality, July 2025 https://www.youtube.com/watch?v=b_JBHNQa6bM

- Generative Engine Optimisation Guide (GEO) for ChatGPT, Perplexity, Gemini, Claude, Copilot

- The Future of Search Value: Why LLMs will drive 75% of revenue by 2028, https://explodingtopics.com/blog/llm-search